Follow-up Logic That Looks Right But Fails In Practice

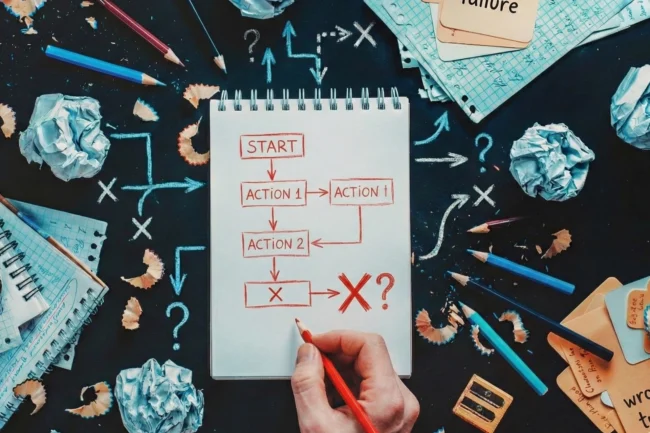

Follow-up is often treated as a solved problem once basic automation exists. Messages are scheduled, conditions are defined, and the logic appears sound on paper. From a distance, the system looks complete.

During implementation, follow-up is one of the first places where clean logic starts to drift once real behavior enters the system. This note captures what tends to surface after launch, when the follow-up flow meets actual usage patterns.

1. The Situation

During build work, follow-up logic is usually defined early and locked in quickly.

A form submits.

A message goes out.

A reminder triggers if no response is recorded.

On the surface, the flow makes sense. Each step has a reason, and the sequence feels complete. Once implemented, the system is technically doing what it was designed to do.
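As a point of reference, the three steps above can be sketched in a few lines. This is a minimal illustration, not a real implementation: the `Lead` record, the `next_action` function, and the two-day `REMINDER_DELAY` are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Assumed delay between reminders; the real value depends on the flow.
REMINDER_DELAY = timedelta(days=2)


@dataclass
class Lead:
    """Hypothetical record created when a form submits."""
    submitted_at: datetime
    response_recorded: bool = False
    reminders_sent: int = 0


def next_action(lead: Lead, now: datetime) -> str:
    """Naive rule: keep reminding until a response is recorded."""
    if lead.response_recorded:
        return "stop"
    # Each reminder is due one delay after the previous step.
    due = lead.submitted_at + REMINDER_DELAY * (lead.reminders_sent + 1)
    return "send_reminder" if now >= due else "wait"
```

Note that the only exit is `response_recorded`. The rule is correct as written, but it depends entirely on that one flag being set whenever a person actually responds.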

2. Where Things Commonly Break

In practice, follow-up logic often assumes cleaner behavior than reality provides.

- People reply outside expected channels.

- Status changes aren’t recorded consistently.

- Edge cases accumulate quietly: partial replies, verbal confirmations, off-platform responses.

The system keeps following up because, from its perspective, nothing has changed. What was meant to feel attentive starts to feel repetitive, misaligned, or intrusive.

The logic isn’t broken.

The assumptions underneath it are.

3. The Constraint (The Part People Miss)

The constraint is signal reliability.

Follow-up systems depend on accurate, timely signals to know when to stop, pause, or shift behavior. In real environments, those signals are often incomplete, delayed, or never captured at all.

Automation can only react to what it can see. When human actions don’t translate cleanly into system states, follow-up logic loses context fast.
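One way to make this constraint explicit is to separate "no response seen" from "we cannot tell". The sketch below assumes a hypothetical `Signal` record, a `last_sync` timestamp for when the system last pulled in data, and an arbitrary 24-hour freshness window; none of these come from a specific tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum
from typing import List


class Observed(Enum):
    RESPONDED = "responded"
    NO_RESPONSE_SEEN = "no_response_seen"
    UNKNOWN = "unknown"  # signals are too stale to conclude anything


@dataclass
class Signal:
    kind: str          # hypothetical labels, e.g. "reply" or "status_change"
    seen_at: datetime


# Assumed freshness window; beyond this, silence is not treated as evidence.
MAX_SYNC_AGE = timedelta(hours=24)


def observe(signals: List[Signal], last_sync: datetime, now: datetime) -> Observed:
    """Report only what the system can actually see."""
    if any(s.kind == "reply" for s in signals):
        return Observed.RESPONDED
    if now - last_sync > MAX_SYNC_AGE:
        # A reply may exist somewhere the system has not looked recently.
        return Observed.UNKNOWN
    return Observed.NO_RESPONSE_SEEN
```

The third state is the point of the example: a two-valued "responded / not responded" flag quietly turns missing data into a reason to follow up again.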

4. How We Handle It in Implementation

During implementation, follow-up is treated as a sensitive interaction layer, not a background process.

We assume that:

- Signals will be messy

- Status changes won’t always be logged perfectly

- Silence does not always mean inaction

This leads to conservative logic, intentional exit points, and a preference for flows that degrade gracefully instead of escalating automatically.
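A rough sketch of what conservative logic with intentional exit points might look like follows. The `FollowUpState` fields, the `decide` function, and the cap of two reminders are assumptions made for illustration, not a prescribed design.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    SEND_REMINDER = "send_reminder"
    HAND_OFF = "hand_off_to_human"
    STOP = "stop"


# Assumed cap; after this the flow exits instead of escalating.
MAX_REMINDERS = 2


@dataclass
class FollowUpState:
    """Hypothetical snapshot of what the system currently knows."""
    reminders_sent: int = 0
    response_recorded: bool = False
    ambiguous_signal: bool = False  # partial reply, verbal confirmation, off-platform note
    signals_fresh: bool = True      # False when status data may be out of date


def decide(state: FollowUpState) -> Action:
    """Prefer exiting or handing off over sending another message."""
    if state.response_recorded:
        return Action.STOP
    if state.ambiguous_signal or not state.signals_fresh:
        # Messy or stale signals: silence may not mean inaction.
        return Action.HAND_OFF
    if state.reminders_sent >= MAX_REMINDERS:
        # Intentional exit point: degrade to a human rather than escalate.
        return Action.HAND_OFF
    return Action.SEND_REMINDER
```

In this arrangement, sending a reminder is the last branch rather than the default, so any doubt about the signals routes to a person instead of another automated message.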

The aim is not maximum persistence.

It’s appropriate persistence under imperfect information.

5. The Resulting Reality

Follow-up feels measured rather than mechanical.

- Fewer awkward overlaps.

- Less accidental pressure.

- Clearer moments where human judgment takes over.

The system supports engagement without pretending it can fully manage it.

6. Quiet Boundary Statement

This only works when follow-up logic is built on top of settled decisions. When intent, ownership, or next steps are unclear, no sequence can compensate.

Follow-up failures are rarely visible in testing. They surface slowly, through tone mismatches and subtle friction rather than obvious errors. By the time they’re noticed, trust has already been affected.

That’s why follow-up is handled deliberately during implementation: not to perfect the logic, but to respect the limits of what automated systems can reliably infer.